Introduction

Physical systems are described by differential equations. Newton’s second law, electromagnetism, circuit theory and Schrödinger’s equation are just a few examples. Differential equations are classified by their order: the highest derivative that appears in them. Additionally, a differential equation of order $n$ can be converted to a system of $n$ coupled first-order differential equations, which in general can be written as

(1) \[\dot{\mathbf{x}}=\mathbf{f}(\mathbf{x},\mathbf{u},t)\]

(2) \[\mathbf{y}=\mathbf{g}(\mathbf{x},\mathbf{u},t)\]

where $\mathbf{x}$ is an $n$-long vector known as the state vector, $\mathbf{u}$ is the input vector (for example, think of a multiport network that can be excited by applying voltages to its inputs), and $\mathbf{y}$ is the system output, which is a function of $\mathbf{x}$ and $\mathbf{u}$.
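As an illustration of the order reduction, consider a forced, damped oscillator. This is an assumed example: the constants $m$, $c$, $k$ and the forcing $F(t)$ are hypothetical and do not come from the text above. Choosing the position and the velocity as the two states turns one second-order equation into two coupled first-order equations:

```latex
\[ m\ddot{q}+c\dot{q}+kq=F(t), \qquad x_1=q,\quad x_2=\dot{q} \]
\[ \dot{x}_1=x_2, \qquad \dot{x}_2=-\frac{k}{m}x_1-\frac{c}{m}x_2+\frac{1}{m}F(t) \]
```

Here the state vector is $(x_1,x_2)$, the input is $F(t)$, and a natural choice of output is the position $x_1$.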

For many electrical engineering applications, the system of differential equations is built from the relations between the currents and voltages of the circuit elements. These relations are almost always linear and do not change with time (time invariant). For circuits (and many other engineering systems) (1) and (2) take the form

(3) \[\dot{\mathbf{x}}=\mathbf{A}\mathbf{x}+\mathbf{B}\mathbf{u}\]

and

(4) \[\mathbf{y}=\mathbf{C}\mathbf{x}+\mathbf{D}\mathbf{u}\]

where now $\mathbf{A}$, $\mathbf{B}$, $\mathbf{C}$ and $\mathbf{D}$ are constant matrices.
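To make the linear time-invariant form concrete, the sketch below builds the matrices for a series RLC circuit driven by a voltage source. The circuit, the choice of states (capacitor voltage and inductor current), the output (capacitor voltage) and the function name `series_rlc_state_space` are all assumptions made for illustration; they are not taken from the text.

```python
import numpy as np

def series_rlc_state_space(R, L, C):
    """Return (A, B, C_out, D) for a series RLC circuit (illustrative assumption).

    States: x = [v_C, i_L]; input: u = [v_in]; output: y = [v_C].
    Element relations: C dv_C/dt = i_L and L di_L/dt = v_in - R i_L - v_C.
    """
    A = np.array([[0.0, 1.0 / C],
                  [-1.0 / L, -R / L]])
    B = np.array([[0.0],
                  [1.0 / L]])
    C_out = np.array([[1.0, 0.0]])  # named C_out to avoid clashing with the capacitance C
    D = np.array([[0.0]])
    return A, B, C_out, D

A, B, C_out, D = series_rlc_state_space(R=1.0, L=1e-3, C=1e-6)
# The natural frequencies of the circuit are the eigenvalues of A.
poles = np.linalg.eigvals(A)
```

The eigenvalues of the system matrix are the roots of $s^2+(R/L)s+1/(LC)=0$, which is one way to sanity-check the matrices.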

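Although the laws of physics are written in the language of differential equations, they have been obtained from observing and measuring quantities over short but non-infinitesimal intervals of time. Therefore, it is sometimes useful to go back from the differential equations to the underlying system of difference equations. Now, instead of talking about instantaneous rates of change, we talk about average rates. Integrating (3) over some time interval $\Delta t$ leads to

(5) \[\mathbf{x}(t+\Delta t)-\mathbf{x}(t)=\left(\mathbf{A}\bar{\mathbf{x}}+\mathbf{B}\bar{\mathbf{u}}\right)\Delta t\]

In the above equation $\bar{\mathbf{x}}$ and $\bar{\mathbf{u}}$ are the average values of $\mathbf{x}$ and $\mathbf{u}$ over $\Delta t$. If $\mathbf{x}$ and $\mathbf{u}$ are sufficiently smooth functions then

(6) \[\bar{\mathbf{x}}\approx\frac{\mathbf{x}(t)+\mathbf{x}(t+\Delta t)}{2}\]

(7) \[\bar{\mathbf{u}}\approx\mathbf{u}(t')\]

where $t'=t+\Delta t/2$. Therefore (5) becomes

(8) \[\mathbf{x}(t+\Delta t)\approx\left(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A}\right)^{-1}\left(\mathbf{I}+\frac{\Delta t}{2}\mathbf{A}\right)\mathbf{x}(t)+\Delta t\left(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A}\right)^{-1}\mathbf{B}\mathbf{u}(t')\]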
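A single step of this averaged update can be sketched as follows. The sketch assumes the update has the form $(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A})\,\mathbf{x}(t+\Delta t)=(\mathbf{I}+\frac{\Delta t}{2}\mathbf{A})\,\mathbf{x}(t)+\Delta t\,\mathbf{B}\mathbf{u}(t+\Delta t/2)$, that is, the state is averaged over the two end points and the input is taken at the midpoint. The function name `midpoint_step` and the scalar test system are illustrative choices.

```python
import numpy as np

def midpoint_step(A, B, x, u_mid, dt):
    """Advance x(t) to x(t + dt) with the averaged update (a sketch, not a library routine).

    u_mid is the input sampled at the midpoint t + dt/2.
    """
    I = np.eye(A.shape[0])
    lhs = I - 0.5 * dt * A
    rhs = (I + 0.5 * dt * A) @ x + dt * (B @ u_mid)
    # Solve the linear system instead of forming the inverse explicitly.
    return np.linalg.solve(lhs, rhs)

# Scalar decay dx/dt = -x with the input switched off.
A = np.array([[-1.0]])
B = np.array([[1.0]])
x0 = np.array([1.0])
x1 = midpoint_step(A, B, x0, np.array([0.0]), dt=0.1)
```

For this scalar case the step reduces to multiplying by $(1-\Delta t/2)/(1+\Delta t/2)$, which is close to, but not exactly, $e^{-\Delta t}$.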

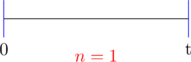

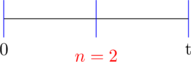

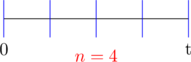

In general if we know an initial state ![]() at time

at time ![]() then we divide the interval

then we divide the interval ![]() into

into ![]() sub-intervals with an increment of

sub-intervals with an increment of ![]() (see the figure above). This allows us +(in principle) to find

(see the figure above). This allows us +(in principle) to find ![]() at

at ![]() . In general if we labelled the state vector at each time instant by the increment

. In general if we labelled the state vector at each time instant by the increment ![]() then

then

(9)

where now ![]() is calculated at

is calculated at ![]() : the mid point of the interval

: the mid point of the interval ![]() . For simplicity we define

. For simplicity we define ![]() and

and ![]() to be

to be

(10) ![]()

and

(11) ![]()

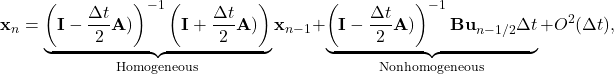

This means that we can write (9) in terms of the newly defined matrices as

(12) ![]()

Evolution of the state vector over time.

Equation (12) and the figure above reveal the mechanism by which the state vector $\mathbf{x}$ evolves over time. $\mathbf{x}_{k+1}$ evolves from the previous state $\mathbf{x}_k$ through two basic linear operations: (1) linearly transforming the state $\mathbf{x}_k$ by applying the linear operator $\hat{\mathbf{A}}$ of (10), and in addition (2) linearly mapping the input vector $\mathbf{u}_k$ through $\hat{\mathbf{B}}$ of (11).

As we will see later, the two transformations mainly depend on the properties of the system matrix $\mathbf{A}$. In the next section, the input is turned off (i.e., $\mathbf{u}=\mathbf{0}$). Therefore we will be interested in the behaviour of the matrix $\hat{\mathbf{A}}$.
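The two-operation update can be sketched in a few lines. The sketch assumes the discrete matrices are $(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A})^{-1}(\mathbf{I}+\frac{\Delta t}{2}\mathbf{A})$ for the state and $\Delta t\,(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A})^{-1}\mathbf{B}$ for the input, with the input sampled at the midpoint of each sub-interval. The names `discretize`, `simulate`, `A_hat`, `B_hat` and the first-order test system are illustrative, not part of the original text.

```python
import numpy as np

def discretize(A, B, dt):
    """Return the discrete state and input matrices for a step of size dt."""
    I = np.eye(A.shape[0])
    M = np.linalg.inv(I - 0.5 * dt * A)
    A_hat = M @ (I + 0.5 * dt * A)
    B_hat = dt * (M @ B)
    return A_hat, B_hat

def simulate(A, B, x0, u_of_t, t_final, n_steps):
    """Iterate x_{k+1} = A_hat x_k + B_hat u_k from t = 0 to t_final."""
    dt = t_final / n_steps
    A_hat, B_hat = discretize(A, B, dt)
    x = np.array(x0, dtype=float)
    history = [x.copy()]
    for k in range(n_steps):
        u_mid = u_of_t((k + 0.5) * dt)   # input at the midpoint of [t_k, t_{k+1}]
        x = A_hat @ x + B_hat @ u_mid    # (1) transform the state, (2) map the input
        history.append(x.copy())
    return np.array(history)

# First-order system dx/dt = -x + u driven by a unit step, starting from rest.
A = np.array([[-1.0]])
B = np.array([[1.0]])
traj = simulate(A, B, [0.0], lambda t: np.array([1.0]), t_final=5.0, n_steps=500)
```

For this test system the exact response is $1-e^{-t}$, so the last row of `traj` can be compared against $1-e^{-5}$.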

Homogeneous case:

In this case

(13) \[\mathbf{x}_{k+1}=\hat{\mathbf{A}}\mathbf{x}_k\]

or

(14) \[\mathbf{x}_N=\hat{\mathbf{A}}^{N}\mathbf{x}_0\]

with $\hat{\mathbf{A}}$ as defined in (10). We let $t_0=0$ and $\Delta t=t/N$. We also note that the **exponential** of a matrix $\mathbf{M}$ is defined to be

(15) \[e^{\mathbf{M}}=\sum_{k=0}^{\infty}\frac{\mathbf{M}^k}{k!}=\mathbf{I}+\mathbf{M}+\frac{\mathbf{M}^2}{2!}+\cdots\]

For our purposes it is sufficient to say that such a matrix series converges. Therefore, if we divide the time interval $[0,t]$ into infinitely many sub-intervals, one gets ($\hat{\mathbf{A}}^{N}$ approaches $e^{t\mathbf{A}}$ as $N$ increases)

(16) \[\mathbf{x}(t)=e^{t\mathbf{A}}\mathbf{x}(0)\]
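The convergence claim can be checked numerically. The sketch below compares the $N$-th power of $(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A})^{-1}(\mathbf{I}+\frac{\Delta t}{2}\mathbf{A})$, with $\Delta t=t/N$, against the matrix exponential evaluated from a truncated power series. The helper names `expm_series` and `cayley_power`, the number of series terms and the lossless-oscillator test matrix are assumptions chosen for illustration.

```python
import numpy as np

def expm_series(M, terms=30):
    """Truncated series I + M + M^2/2! + ... (adequate for matrices of small norm)."""
    result = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, terms):
        term = term @ M / k          # term now holds M^k / k!
        result = result + term
    return result

def cayley_power(A, t, n_steps):
    """N-th power of the one-step matrix for a step size dt = t / N."""
    dt = t / n_steps
    I = np.eye(A.shape[0])
    A_hat = np.linalg.solve(I - 0.5 * dt * A, I + 0.5 * dt * A)
    return np.linalg.matrix_power(A_hat, n_steps)

A = np.array([[0.0, 1.0],
              [-1.0, 0.0]])          # lossless oscillator: exp(tA) is a rotation
t = 1.0
exact = expm_series(t * A)
errors = [np.linalg.norm(cayley_power(A, t, n) - exact) for n in (10, 100, 1000)]
```

The entries of `errors` shrink as the number of sub-intervals grows, which is the discrete counterpart of the limit leading to the exponential solution.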

Nonhomogeneous case:

In this case we use the general form (9) and apply it iteratively at the time steps $t_0,t_1,\dots,t_{N-1}$. Note that at each time step $\mathbf{x}_{k+1}$ depends on the previous value $\mathbf{x}_k$ and $\mathbf{u}_k$. This means that $\mathbf{x}_N$ depends on $\mathbf{x}_0$ and *all* previous inputs $\mathbf{u}_k$ at $k=0,1,\dots,N-1$. The dependency of $\mathbf{x}_N$ on all the previous input values is nothing but the **convolution** operation. In fact it is easy to show that

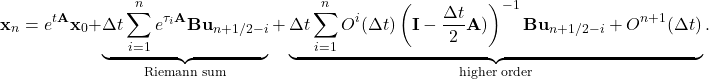

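(17) \[\mathbf{x}_N=\hat{\mathbf{A}}^{N}\mathbf{x}_0+\sum_{i=1}^{N}\hat{\mathbf{A}}^{i-1}\hat{\mathbf{B}}\mathbf{u}_{N-i}\]

with $\hat{\mathbf{A}}$ and $\hat{\mathbf{B}}$ as defined in (10) and (11). Note that, with $\tau_i=(i-1)\Delta t$,

\[\left[\left(\mathbf{I}-\frac{\Delta t}{2}\mathbf{A}\right)^{-1}\left(\mathbf{I}+\frac{\Delta t}{2}\mathbf{A}\right)\right]^{i-1}=e^{\tau_i\mathbf{A}}+\underbrace{O^i(\Delta t)}_{\textnormal{remainder}}.\]

Therefore, using also $\hat{\mathbf{B}}=\Delta t\,\mathbf{B}+O(\Delta t^2)$, (17) becomes

(18) \[\mathbf{x}(t)\approx e^{t\mathbf{A}}\mathbf{x}(0)+\sum_{i=1}^{N}e^{\tau_i\mathbf{A}}\mathbf{B}\mathbf{u}_{N-i}\Delta t\]

Note that $\mathbf{u}$ above is sampled at the points $t-\tau_i-\Delta t/2$.

If we divide the interval $[0,t]$ into infinitely many sub-intervals, i.e., let $N\to\infty$, the sum becomes an integral. Therefore,

(19) \[\mathbf{x}(t)=e^{t\mathbf{A}}\mathbf{x}(0)+\int_0^t e^{\tau\mathbf{A}}\mathbf{B}\mathbf{u}(t-\tau)\,d\tau\]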

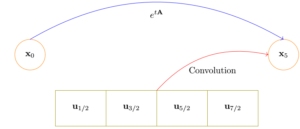

Convolution operator maps the inputs to the states.

The figure above shows how the state at the final time $t$ is related to the initial state $\mathbf{x}(0)$ and the history of the input $\mathbf{u}$. This is the convolution picture, where the dependency on the states has been encapsulated in the convolution operation.
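The convolution picture can be checked on a scalar system. The sketch below assumes the discrete form $x_N=\hat{a}^{N}x_0+\sum_{i=1}^{N}\hat{a}^{\,i-1}\hat{b}\,u_{N-i}$ with $\hat{a}=(1+\frac{\Delta t}{2}a)/(1-\frac{\Delta t}{2}a)$, $\hat{b}=\Delta t\,b/(1-\frac{\Delta t}{2}a)$ and the input sampled at $t-\tau_i-\Delta t/2$, where $\tau_i=(i-1)\Delta t$. It is compared with the closed-form value of $e^{at}x_0+\int_0^t e^{a\tau}b\,u(t-\tau)\,d\tau$ for a constant input. The function name `convolution_sum` and the numerical values are illustrative assumptions.

```python
import numpy as np

def convolution_sum(a, b, x0, u_of_t, t, n_steps):
    """Scalar discrete convolution: initial-state term plus weighted past inputs."""
    dt = t / n_steps
    a_hat = (1 + 0.5 * dt * a) / (1 - 0.5 * dt * a)
    b_hat = dt * b / (1 - 0.5 * dt * a)
    total = a_hat ** n_steps * x0
    for i in range(1, n_steps + 1):
        tau_i = (i - 1) * dt
        # The input u_{N-i} is sampled at the midpoint of its sub-interval.
        total += a_hat ** (i - 1) * b_hat * u_of_t(t - tau_i - 0.5 * dt)
    return total

a, b, x0, t = -2.0, 1.0, 1.0, 1.5
u = lambda s: 1.0                      # constant (unit step) input
x_discrete = convolution_sum(a, b, x0, u, t, n_steps=2000)
# Closed form for constant input: e^{at} x0 + (b/a)(e^{at} - 1).
x_exact = np.exp(a * t) * x0 + (b / a) * (np.exp(a * t) - 1.0)
```

With a few thousand sub-intervals the discrete sum and the integral agree to many digits, which illustrates how the sum over past inputs turns into the convolution integral.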